Calamari is a great console developed by the Inktank team, but building it used to be a difficult and laborious process. The good news is that the team now builds binary packages and publishes them in a repository, which makes installing Calamari much easier. Although the packages are still at a development stage, the console is very usable. The repository can be found here:

http://download.ceph.com/calamari/

To set up the repository, run the following command (the file lives under /etc/apt, so it has to be written as root):

echo "deb http://download.ceph.com/calamari/devel/trusty/ trusty main" | sudo tee /etc/apt/sources.list.d/calamari.list

Then get the repository key:

wget -q -O- 'https://ceph.com/git/?p=ceph.git;a=blob_plain;f=keys/autobuild.asc' | sudo apt-key add -

Finally, refresh the package index and install the packages:

$ sudo apt-get update

$ sudo apt-get install calamari-server calamari-clients

Then run the initialization:

$ sudo calamari-ctl initialize

[INFO] Loading configuration..

[INFO] Starting/enabling salt...

[INFO] Starting/enabling postgres...

[INFO] Updating database...

[INFO] Initializing web interface...

[INFO] Starting/enabling services...

[INFO] Updating already connected nodes.

[INFO] Restarting services...

[INFO] Complete.
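Since the initialization can fail partway through, it may help to check its output automatically. The following is a minimal sketch, assuming a successful run ends with the "[INFO] Complete." line shown above; the helper name init_completed is hypothetical and not part of Calamari.

```shell
#!/bin/sh
# Sketch: decide whether `calamari-ctl initialize` finished cleanly by
# looking at the last non-empty line of its output (read from stdin).
# Assumption: a successful run ends with "[INFO] Complete.".
init_completed() {
    last_line=$(grep -v '^[[:space:]]*$' | tail -n 1)
    [ "$last_line" = "[INFO] Complete." ]
}

# Demo on a shortened copy of the output shown above.
if printf '%s\n' '[INFO] Restarting services...' '' '[INFO] Complete.' | init_completed; then
    echo "initialize completed"
else
    echo "initialize did not complete"
fi
```

On a real server you could save the output first, for example with `sudo calamari-ctl initialize 2>&1 | tee init.log`, and then run `init_completed < init.log`.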

If you want to go through the process of building the packages for yourself, you can do it with the help of these guides:

http://ceph.com/calamari/docs/operations/server_install.html

https://ceph.com/category/calamari/

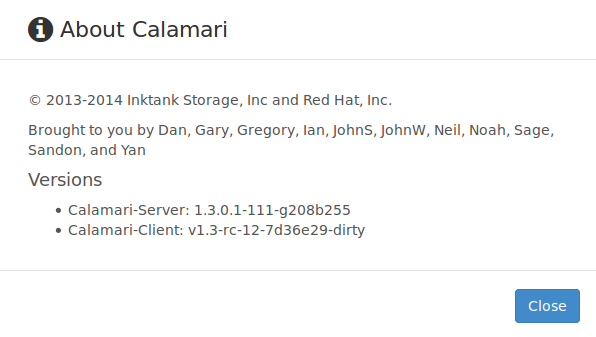

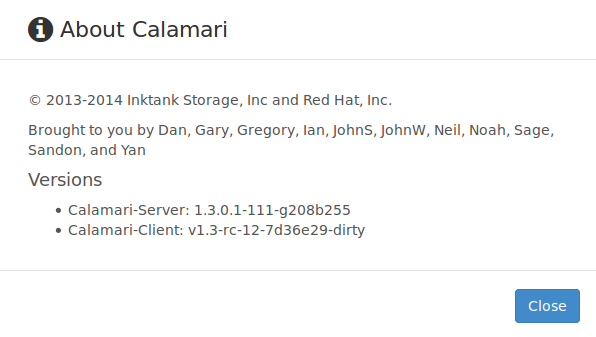

In fact, I had to build the calamari-server package from source because I couldn't make the binary package work on my server: the initialize process always finished with errors. I guess there was a bug in the binary package that had already been fixed in the sources. To save you time, if you want to install the package I built, you can get it here: calamari-server_1.3.0.1-111-g208b255_amd64.deb

If you built the packages from source or used the package I posted here, you should check this post too.

Calamari needs SaltStack to run, but I faced issues with the latest versions of SaltStack; I guess Calamari is only compatible with the 2014.7 branch. To install this version, you can add this repository:

echo "deb http://ppa.launchpad.net/saltstack/salt2014-7/ubuntu trusty main" | sudo tee /etc/apt/sources.list.d/saltstack-salt-trusty.list

Install the repository key:

gpg --keyserver keyserver.ubuntu.com --recv-key 0E27C0A6 && gpg -a --export 0E27C0A6 | sudo apt-key add -

Or simply run this command to get the repository working on your machine:

$ sudo add-apt-repository ppa:saltstack/salt2014-7

Now you can install salt-master, and salt-minion if needed:

$ sudo apt-get install salt-master
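Because only the 2014.7 series worked for me, it can be worth checking the installed version before going further. This is a small sketch of such a check; the helper name is_salt_2014_7 is hypothetical, and the version string in the demo is just an example of the format dpkg reports.

```shell
#!/bin/sh
# Sketch: succeed only if a Salt version string belongs to the 2014.7 series.
# The helper name is hypothetical, not part of Salt or Calamari.
is_salt_2014_7() {
    case "$1" in
        2014.7.*) return 0 ;;
        *)        return 1 ;;
    esac
}

# Demo with an example 2014.7.x version string.
if is_salt_2014_7 "2014.7.5+ds-1ubuntu1"; then
    echo "salt version is on the 2014.7 branch"
else
    echo "salt version is NOT on the 2014.7 branch"
fi
```

On a real host the argument could come from `dpkg-query -W -f='${Version}' salt-master`. If the check passes, `sudo apt-mark hold salt-master salt-common` is one way to keep apt from upgrading the packages later.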

Make sure your Ceph nodes run the same Salt version as your master:

$ sudo dpkg -l | grep salt

ii salt-common 2014.7.5+ds-1ubuntu1 all shared libraries that salt requires for all packages

ii salt-master 2014.7.5+ds-1ubuntu1 all remote manager to administer servers via salt

ii salt-minion 2014.7.5+ds-1ubuntu1 all client package for salt, the distributed remote execution system
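Comparing versions by eye gets tedious with several nodes, so here is a minimal sketch that reads `dpkg -l` output and reports whether all salt packages share one version. The helper names salt_versions and versions_match are hypothetical.

```shell
#!/bin/sh
# Sketch: extract the salt package versions from `dpkg -l` output (stdin)
# and report whether they all match. Helper names are hypothetical.
salt_versions() {
    # Installed packages start with "ii"; column 2 is the name, column 3 the version.
    awk '$1 == "ii" && $2 ~ /^salt-/ { print $3 }' | sort -u
}

versions_match() {
    # True when exactly one distinct version is present.
    [ "$(salt_versions | wc -l | tr -d ' ')" -eq 1 ]
}

# Demo with lines in the same format as the listing above.
sample='ii salt-common 2014.7.5+ds-1ubuntu1 all shared libraries
ii salt-master 2014.7.5+ds-1ubuntu1 all remote manager
ii salt-minion 2014.7.5+ds-1ubuntu1 all client package'
if printf '%s\n' "$sample" | versions_match; then
    echo "salt versions match"
else
    echo "salt versions differ"
fi
```

To compare the master with the Ceph nodes, you could concatenate the `dpkg -l` output collected from each host (for example over ssh) and pipe the result into versions_match.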

You can check whether the Calamari services are running OK:

$ sudo supervisorctl status

carbon-cache RUNNING pid 1088, uptime 23:19:02

cthulhu RUNNING pid 27734, uptime 0:15:00
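If you want to script this check, the sketch below lists every program whose state is not RUNNING. It assumes the status format shown above (program name in the first column, state in the second); the helper name not_running is hypothetical.

```shell
#!/bin/sh
# Sketch: read `supervisorctl status` output on stdin and print the names
# of programs that are not in the RUNNING state.
not_running() {
    # Skip blank lines; column 1 is the program name, column 2 its state.
    awk 'NF >= 2 && $2 != "RUNNING" { print $1 }'
}

# Demo with the two lines from the listing above.
failed=$(printf '%s\n' \
    'carbon-cache RUNNING pid 1088, uptime 23:19:02' \
    'cthulhu RUNNING pid 27734, uptime 0:15:00' | not_running)
if [ -z "$failed" ]; then
    echo "all services running"
else
    echo "not running: $failed"
fi
```

On the Calamari server the real input would come from `sudo supervisorctl status | not_running`.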

If something is wrong, check the /var/log/calamari directory.
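A quick way to skim that directory is to search it for error lines. This is only a sketch: it assumes problems are logged with the word "ERROR", which may not hold for every log file, and the helper name recent_errors and its 20-line default are arbitrary choices.

```shell
#!/bin/sh
# Sketch: print up to N lines mentioning ERROR found under a log directory
# (the tail of grep's output). Assumption: errors contain the word "ERROR".
recent_errors() {
    dir=${1:-/var/log/calamari}
    count=${2:-20}
    grep -rH 'ERROR' "$dir" 2>/dev/null | tail -n "$count"
}

# Demo against a throwaway directory so the sketch runs anywhere.
demo_dir=$(mktemp -d)
printf '%s\n' 'INFO started' 'ERROR something failed' > "$demo_dir/example.log"
recent_errors "$demo_dir"
rm -rf "$demo_dir"
```

On the Calamari server, calling `recent_errors` with no arguments searches the log directory itself; you may need sudo to read the files.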