In this post, I am testing the IO limits for disks provided by KVM. Why should you configure the disk limits in your guests? If you have your KVM disks in a shared storage back-end, as I have, and you cannot control the operations inside your VPS, you should establish some limits for each disk. You should monitor your VPS IOPS load if you don’t want to suffer an overhead in your storage caused by one VPS consuming a lot of IOPS. One VPS can saturate the storage degrading too much the performance of the rest of VPS. This can be, because your VPS are administered by very different people/customers or because you don’t want that an operation you perform in one VPS (that needs high IOPS) can cause IOWAIT to other VPS.

I am using ISCSI with 10 SAS drives configured as RAID10 for my back-end storage. In this test I configured in the virtio disk 200 IOPS and 10MBs for max bandwidth.

The first tool I used to test the limits is hdparm inside the VPS. With hdparm I tested the max bandwidth I could get from the storage, because this tool performs a sequential access. Without the limits I got 156MB and with the disk limited I got the expected 10MB.

hdparm with no limits on the disk:

# hdparm -t /dev/vda

/dev/vda:

Timing buffered disk reads: 472 MB in 3.02 seconds = 156.36 MB/sec

hdparm with the disk limited to 10MBs:

# hdparm -t /dev/vda

/dev/vda:

Timing buffered disk reads: 32 MB in 3.02 seconds = 10.61 MB/sec

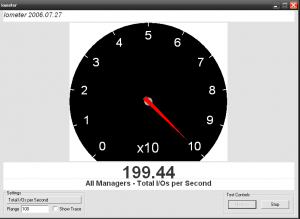

We can verify that the limits are perfectly applied. I used another tool, iometer, to verify the limits, because iometer has many configuration options to test them. To test the max bandwidth I configured a iometer test with a 256KB transfer request and 100% secuencial read access. With this iometer test I got a very similar value to hdparm with no limits on the disk:

And with the disk limited to 10MBs I got again the same value:

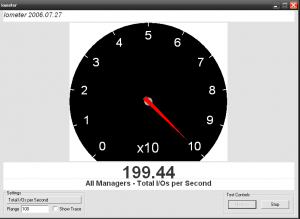

Ok, we know that the max bandwidth limits are applied perfectly well. I wanted to test too the KVM disk limited by IOPS instead of bandwidth. I configured the disk limited to 200IOPS and to test this limit I made a new iometer test, but this time with a 512B transfer rate and 100% sequential read access.

With the disk no limited and disk cache set to none I got about 1800 IOPS:

With the disk limited to 200 IOPS I got exactly 200IOPS avg:

The IO limits are applied perfectly well for the VPS and another very important point: These limits are applied regardless of the disk cache mode used. If you use writeback cache mode, you can get many more IOPS in the guest than if you use cache mode set to none, but if you set IO limits on the disk you will get exactly the same IOPS in the guest. Ok, I use cache mode set to none for almost all VPS, so what limits should I configure to prevent abusive use of the storage?

Well, this is very dependant on the workload, but for me, the most painful IO operations I suffered, are from those that demand very sequential access, like creating a large tar.gz, making backups or the process of booting the VPS.

I want to limit the guest IOPS, but without affecting to much the performance of the guest. To archive this I configure 10MB for max bandwidth (reading and writing), 200IOPS for reading and 180IOPS for writing. After some time using these limits, I guess the VPS are hiting the bandwidth limit time to time. For example the VPS boot process lasts a bit longer, but after booting the VPS have an acceptable performance and the entire storage performance are better managed.